An illuminating dive into the latest science on our brain’s remarkable learning abilities and the potential of the machines we program to imitate them

“Conscious”

The book “Conscious” by Annaka Harris is an ambitious attempt to provide insight into how consciousness is integrated with matter. I find it a brief, concise summary of a very exciting subject.

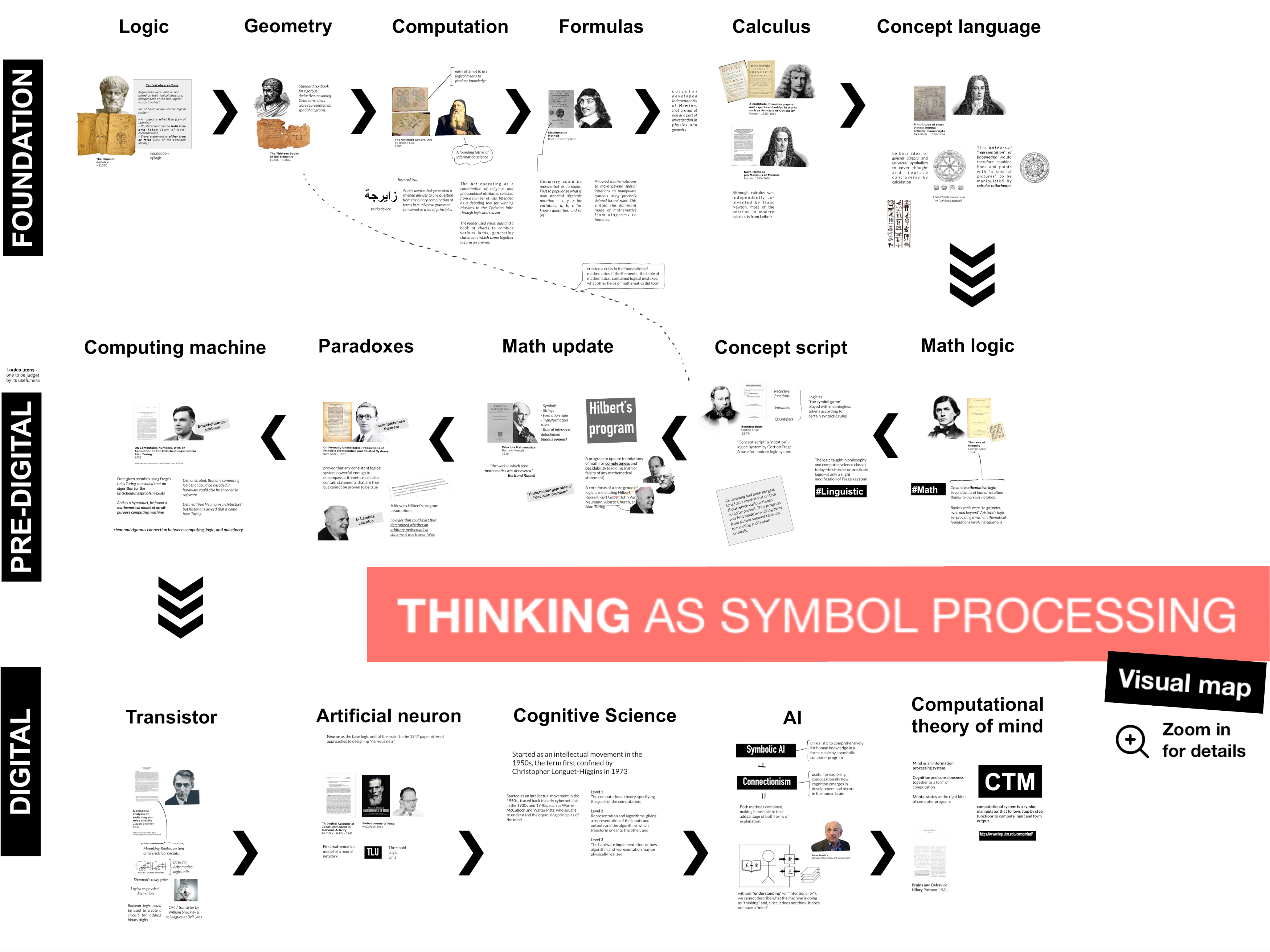

A short history of thinking as symbol processing

What is this tradition of explaining thinking by representing the brain as a computer, an object grounded in mathematical logic?
