AI Knowledge Acquisition

living idea

How can computer systems acquire knowledge to assist humans?

Books (25)

- Jeff Hawkins and his team discovered that the brain uses maplike structures to build a model of the world: not just one model, but hundreds of thousands of models of everything we know. Hawkins uses this finding to address the origin of high-level thought. (Mar 2, 2021)

- How computer scientists and philosophers are defining the biggest question of our time: how will we create intelligent machines that improve our lives rather than complicate or even destroy them? (Oct 30, 2020)

- An illuminating dive into the latest science on our brain's remarkable learning abilities and the potential of the machines we program to imitate them. (Jan 11, 2020)

- An insightful exploration of the relationship between technological advances and work, from preindustrial society through the Computer Revolution. (Jun 18, 2019)

- Superminds shows that instead of fearing the rise of artificial intelligence, we should focus on what we can achieve by working with computers, because together we will change the world. (May 15, 2019)

- Intellectual impresario John Brockman assembles twenty-five of the most important scientific minds for an unparalleled round-table examination of the mind, thinking, intelligence, and what it means to be human. (Feb 19, 2019)

- The book delivers an abundance of information, insights, and counsel on what its author, Shoshana Zuboff, calls the darkening of the digital age. It is about the challenges to humanity posed by the digital future and an unprecedented new form of power. (Jan 30, 2019)

- On how China caught AI fever and set government goals (with benchmarks) for 2020 and 2025 in an attempt to become the world center of AI innovation by 2030. (Sep 25, 2018)

- The subject of causation has preoccupied philosophers at least since Aristotle. The absence, however, of an accepted scientific approach to analyzing cause and effect is not merely of historical or theoretical interest. The book covers how understanding causality has revolutionized science so far and will revolutionize AI. (May 15, 2018)

- A practical guide for business leaders looking to get value from the adoption of machine learning technology. (Apr 30, 2018)

- An essential primer on a rapidly emerging Internet-of-Things concept, focusing on human-centric applications. An indispensable resource for researchers and app developers eager to explore HiTL concepts and include them in their designs. (Feb 5, 2018)

- A comprehensive overview of the entire field of machine learning that is better than most books on the topic. The author also explores an idea, related to his scientific research, of a master algorithm that could explain everything given enough data. (Sep 22, 2015)

- The first book to name, characterize, and consolidate a wide array of current critical, theoretical, and philosophical approaches to decentering the human in favor of a concern for the nonhuman in the humanities and social sciences. (Mar 9, 2015)

- In the longer run, biological human brains might cease to be the predominant nexus of Earthly intelligence. It is possible that one day we may be able to create "superintelligence": a general intelligence that vastly outperforms the best human brains in every significant cognitive domain. (Jul 3, 2014)

- An explanation of the technology revolution that is overturning the world’s economies. (Jan 20, 2014)

- On how the digital universe exploded in the aftermath of WWII, the nature of digital computers, and how code took the world by storm. (Dec 9, 2012)

- Jeff Hawkins (co-founder of Numenta) on what we know and don’t know about how the human brain works, explaining why computers are not intelligent and how, based on this new theory, we may build intelligent machines. (Apr 1, 2007)

- In one of the founding documents of the study of intelligent machines, The Human Use of Human Beings, Norbert Wiener does a remarkable job of identifying many of the most significant trends to arise (Mar 22, 1988)

- The book was the first systematic study of parallelism in computation, and it remained a classic work on threshold automata networks for nearly two decades. (Apr 17, 1969)

- The book argues that tacit knowledge (tradition, inherited practices, implied values, and prejudgments) is a crucial part of scientific knowledge, making it an integral part of all knowledge, and one that we are unable to access readily or to express precisely. (May 1, 1967)

- One of the greatest mathematicians of the twentieth century, John von Neumann, explores the analogies between computing machines and the living human brain. (May 24, 1958)

- The darkening of the digital age and the arrival of a new economic order.

- Will we all be better off having fully evolved AI at our disposal? Or will it cause turmoil in the job market? Any serious exploration of such topics should begin with a thorough read of this book.

- The wonderful essence of Tom’s book is to imagine how people and computers will interact on a massive scale to create intelligent systems.

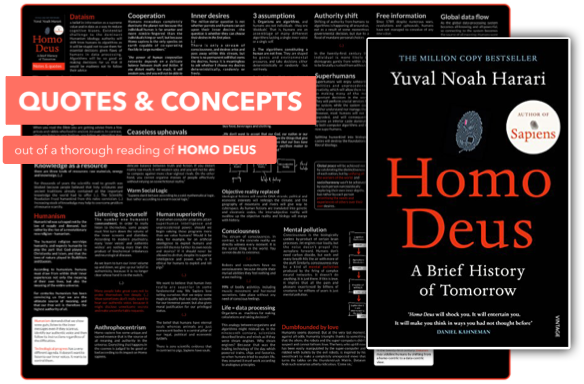

- An uncompromising exploration of evolutionary concepts. Harari attempts to illustrate the future of humanity and redefines the meaning of 'Dataism' along the way.

Articles (6)

- To allow a neural net to process symbols the way a mathematician does, mathematical expressions were translated into more useful tree forms. This process parallels how people solve integrals, and really all math problems. (May 22, 2020)

- The conventional wisdom says we can expect a more centralized structure. The author says the conventional wisdom has it wrong. (Apr 1, 2019)

- What is this tradition of explaining thinking by representing the brain as a computer, an object grounded in mathematical logic? (Feb 15, 2019)

- Why it is worth paying attention to the article by Erik Brynjolfsson and Tom Mitchell (Science Dec 22, 2018) and what it changes when discussing the effects of AI on the job market. (Jan 22, 2019)

- An inquiry into what is missing for Artificial Intelligence to learn like a child. Article from Science, Vol. 360, Issue 6391 (May 2018). (Jul 8, 2018)

- Inspired by Simon’s 1960 paper, the article weaves many other strands into the tapestry, from classical discussions of the division of labor to present-day evolutionary psychology. (Sep 9, 2002)

Scientific Papers (15)

- The article builds a bridge between the Gestalt principles of human perception (which describe how visual elements are interpreted) and convolutional neural network modeling. It suggests that a single principle, adaptation to the statistical structure of the environment, might suffice to explain perceptual mechanisms. (Apr 9, 2021)

- A multi-modal framework that integrates a spatial-aware self-attention mechanism into the Transformer architecture. (Dec 29, 2020)

- A new language representation model called BERT: Bidirectional Encoder Representations from Transformers. BERT is conceptually simple and empirically powerful. It obtains new state-of-the-art results on eleven natural language processing tasks. (Oct 11, 2018)

- This paper presents DeepLayout, a new approach to page layout analysis. Previous work divides the problem into unsupervised segmentation and classification. Instead of a step-wise method, we adopt semantic segmentation, which is an end-to-end trainable deep neural network. Our proposed segmentation model takes only a document image as input and predicts per-pixel saliency maps. (Jul 23, 2018)

- This paper is a first step in developing a conceptual framework to study how machines replace human labor and why this might (or might not) lead to lower employment and stagnant wages. (Jun 14, 2018)

- A method for mapping unaligned videos from multiple sources to a common representation using self-supervised objectives constructed over both time and modality (i.e. vision and sound). Embedding YouTube videos in this representation is used to construct a reward function that encourages an agent to imitate human gameplay. (May 29, 2018)

- The article puts forward ULMFiT, an effective transfer learning method that can be applied to any task in NLP. With only 100 labeled examples, it matches the performance of training from scratch on 100x more data, and it reduces error by 18-24% relative to the state of the art on six text classification tasks. (May 23, 2018)

- Semi-supervised learning (SSL) based on deep neural networks has recently proven successful on standard benchmark tasks. The performance of baselines that do not use unlabeled data is often underreported, SSL methods differ in sensitivity to the amount of labeled and unlabeled data, and performance can degrade substantially when the unlabeled dataset contains out-of-distribution examples. To help guide SSL research towards real-world applicability, we make our unified reimplementation and evaluation platform publicly available. (Apr 24, 2018)

- The article proposes a new, simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions entirely. The model achieves 28.4 BLEU on the WMT 2014 English-to-German translation task, improving over the existing best results, including ensembles, by over 2 BLEU. The Transformer also generalizes well to other tasks. (Dec 15, 2017)

- A general framework for few-shot learning. The method, called the Relation Network (RN), is trained end-to-end from scratch. Besides providing improved performance on few-shot learning, our framework is easily extended to zero-shot learning. (Nov 16, 2017)

- Popular models that learn word representations ignore the morphology of words by assigning a distinct vector to each word. The article proposes a new approach based on the skipgram model, where each word is represented as a bag of character n-grams. A vector representation is associated with each character n-gram, and words are represented as the sum of these representations. (Jun 19, 2017)

- Deeper neural networks are more difficult to train. We present a residual learning framework to ease the training of networks that are substantially deeper than those used previously. We explicitly reformulate the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions. Solely due to our extremely deep representations, we obtain a 28% relative improvement on the COCO object detection dataset. (Dec 10, 2015)

- An investigation of why automation has not wiped out a majority of jobs over the decades and centuries, and of how recent and future advances in artificial intelligence and robotics should shape our thinking about the likely trajectory of occupational change and employment growth. (Jun 10, 2015)

- An inherent limitation of word representations is their indifference to word order and their inability to represent idiomatic phrases. The article presents a simple method for finding phrases in text and shows that learning good vector representations for millions of phrases is possible. (Oct 16, 2013)

- A demonstration of cross-modality feature learning, where better features for one modality (e.g., video) can be learned if multiple modalities (e.g., audio and video) are present at feature learning time. (Jan 1, 2011)
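
One of the papers listed above describes representing each word as a bag of character n-grams and summing the n-gram vectors to get the word vector. The sketch below illustrates only that composition step, under stated assumptions: the function names (`char_ngrams`, `ngram_vector`, `word_vector`), the `<`/`>` boundary markers, the 3 to 6 n-gram range, and the vector dimension are all illustrative choices, and the n-gram vectors are deterministic pseudo-random stand-ins rather than trained embeddings.

```python
import random
import zlib


def char_ngrams(word, n_min=3, n_max=6):
    """Return all character n-grams of the word wrapped in boundary markers."""
    token = f"<{word}>"  # boundary markers are an assumption of this sketch
    return [
        token[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(token) - n + 1)
    ]


def ngram_vector(gram, dim=8):
    """Hypothetical stand-in for a learned n-gram embedding.

    A real model would look the vector up in a trained table; here a
    pseudo-random vector is seeded from the n-gram so results are repeatable.
    """
    rng = random.Random(zlib.crc32(gram.encode("utf-8")))
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def word_vector(word, dim=8, n_min=3, n_max=6):
    """Represent a word as the sum of the vectors of its character n-grams."""
    total = [0.0] * dim
    for gram in char_ngrams(word, n_min, n_max):
        for k, value in enumerate(ngram_vector(gram, dim)):
            total[k] += value
    return total


if __name__ == "__main__":
    print(char_ngrams("where", 3, 3))
    print(word_vector("where"))
```

Because the vector is built from sub-word pieces, two words that share many n-grams (for example a word and its plural) share many summands, which is how this kind of model can account for morphology.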