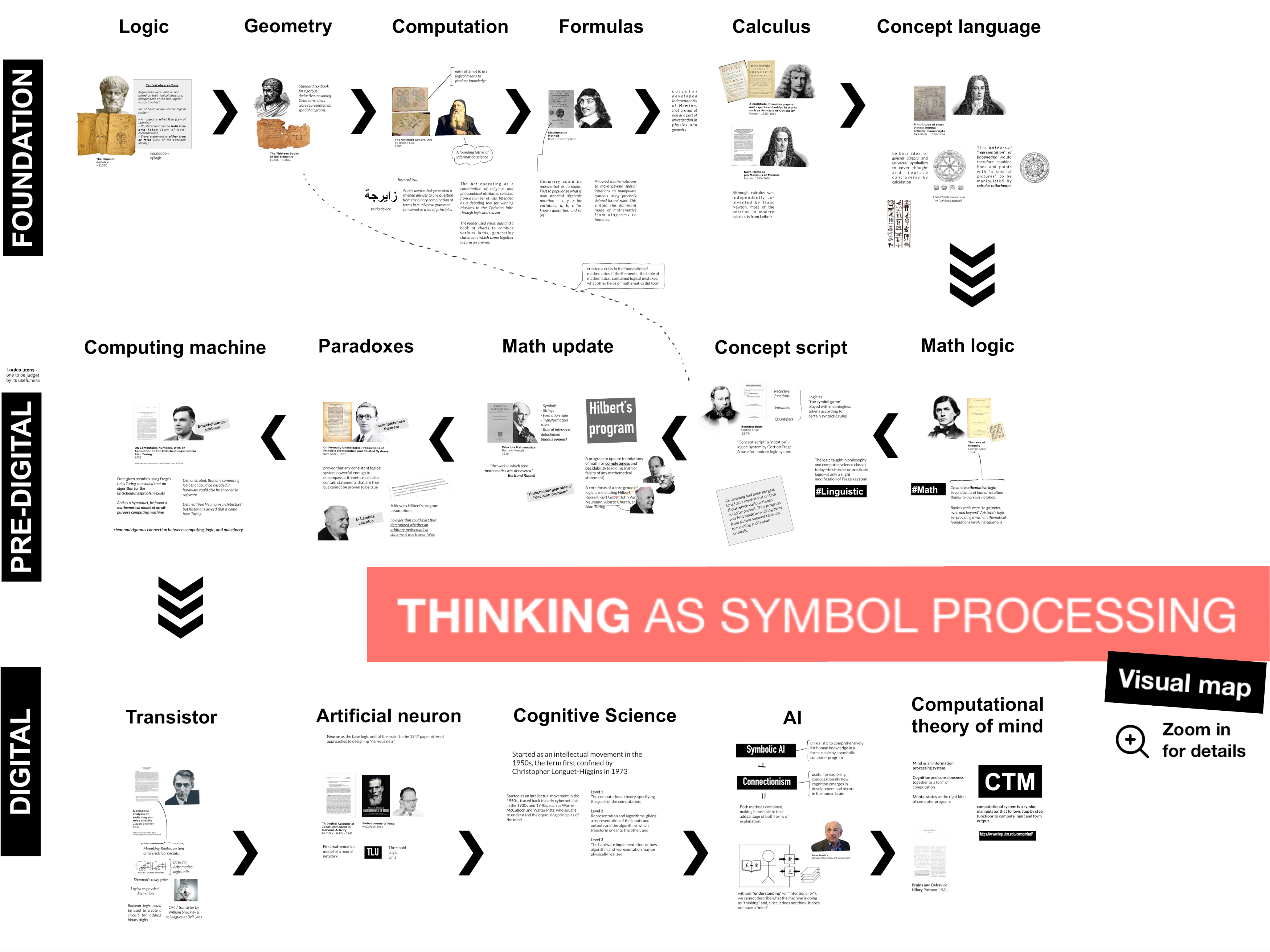

What is this tradition of explaining thinking by representing the brain as a computer, an object grounded in mathematical logic?

Artificial empathy

In the not-so-distant future, algorithms running on your smartphone will have the capacity for empathy. Devices will soon appear to read your mental states. What would your reaction be?

Workforce implications of Machine Learning

Why it is worth paying attention to the article by Erik Brynjolfsson and Tom Mitchell (Science, Dec 22, 2017), and what it changes in discussions of the effects of AI on the job market.
